How can we empower people in their interactions with AI systems?

AI-driven products and systems are becoming increasingly widespread. More people are interacting with AI every day, knowingly or otherwise. As we progress, we should continue to develop processes, governance frameworks, and regulations to ensure that AI systems are as trustworthy as possible. We also need to empower people to decide for themselves whether to trust an AI system in any given context.

We believe that there are at least three approaches to help with this:

1. Help people to better understand AI, by explaining when and how they are interacting with an AI system, and being transparent about the decisions it makes and the outcomes it produces;

2. Give people meaningful control over their relationship with an AI system, giving them choices and agency where it makes sense to do so;

3. Give people confidence that there are checks and balances in place, much like in other parts of society, by developing processes and mechanisms of accountability.

TTC Labs has been bringing together industry, policymakers and experts in an effort to understand how we can develop more sustainable relationships between AI-driven products and the people who use them.

Providing transparency and explainability of artificial intelligence (AI) presents complex challenges across industry and society, raising questions around how to build confidence and empower people in their use of digital products.

The purpose of this report is to contribute to collaborative, cross-sector efforts to address these questions. It shares the key findings of a project conducted in 2021 between TTC Labs and Open Loop in collaboration with the Singapore Infocomm Media Development Authority (IMDA) and Personal Data Protection Commission (PDPC). Through this project we have developed a series of operational insights and guidance for bringing greater transparency to AI-powered products.

Download the report

Unpack key terms related to trustworthy AI with this interactive tool

View AI Glossary

Trustworthy AI Experiences

Understanding AI

How can we empower people to make informed decisions about trusting an AI system?

When people don’t understand how an AI-driven service comes up with results, they may feel less able to discern whether or not to trust it. We therefore need to design mechanisms that explain to people when, how and why they are affected by AI systems, to help support greater comprehension of AI.

These mechanisms should drive practical understanding and agency for everybody, not just for the narrow few. That means the explanations must be provided in a way that caters for non-experts, and gives people the information they need to make a reasonably informed judgement.

The goal is not product explanations, but people’s understanding.

Through our interactive workshops and research, we’ve found that people draw on their wider knowledge and experience to develop their understanding of an AI in an active manner. Their understanding can change depending on how a product behaves and surfaces results, as people situate explanations within the context of their experience. This means that we must adopt a more interactive concept of explainability, moving beyond the idea that product makers provide explanations and product users merely receive them. Product makers can take a more collaborative approach to explainability through the use of interactive touchpoints, user controls and implicit explainability mechanisms.

“I like being able to open a new app and just understand how to use it, without long instructions.”

Antonio

“I don't know why some articles are surfaced over others, but I'm ok with that.”

Rochelle

Comprehensive transparency and highly detailed explanations aren’t always useful, desired, or even possible. The key to creating positive user experiences is knowing how much to reveal and when.

Product makers need to ensure people are provided with the information most relevant to their respective needs and contexts. They also need to consider how explainability is balanced between different audiences, such as general product users and expert stakeholders, and acknowledge the trade-offs that come with this. When a product serves different user groups, the information and control appropriate to each can vary significantly.

It also means accounting for the fact that different people engage with information in different ways, by designing multiple opportunities for a user to develop understanding in a way that is meaningful to them.

In Control of AI

Control is about giving people meaningful agency over their relationship with an AI-powered system.

Controls take many forms; they include mechanisms that allow people to influence the way an AI-powered product generates results, to modify their declared interests and preferences, or to override or remove AI-inferred data inputs. They also include opportunities for people to provide feedback on recommendations and to challenge AI outcomes and explanations if they disagree with them.
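As a rough illustration only, the kinds of controls listed above could be modelled as a small data structure. This is a minimal sketch, not a description of any real product: the `UserControls` class, its field names, and the rule that declared interests always take precedence over inferred ones are all assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class UserControls:
    """Hypothetical record of one person's controls over a recommender."""
    declared: set = field(default_factory=set)   # interests the person stated
    inferred: set = field(default_factory=set)   # interests the system inferred
    removed: set = field(default_factory=set)    # inferred interests the person rejected
    feedback: list = field(default_factory=list) # (item_id, helpful) pairs

    def declare(self, interest: str) -> None:
        # Modify declared interests and preferences.
        self.declared.add(interest)

    def remove_inferred(self, interest: str) -> None:
        # Override an AI-inferred input without erasing the record of it.
        self.removed.add(interest)

    def give_feedback(self, item_id: str, helpful: bool) -> None:
        # Provide feedback on a recommendation.
        self.feedback.append((item_id, helpful))

    def effective_interests(self) -> set:
        # Assumption: declared interests are always kept; inferred ones
        # are used only if the person has not removed them.
        return self.declared | (self.inferred - self.removed)


controls = UserControls(inferred={"golf", "cooking"})
controls.declare("hiking")
controls.remove_inferred("golf")
controls.give_feedback("article-1", False)
```

In this sketch the system would read `effective_interests()` when generating results, so a person's choices directly shape the inputs rather than sitting in a settings page with no effect.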

Comprehensive control is not necessarily possible or desirable, but appropriate controls can enhance people’s understanding of AI systems. There is more work to be done to identify and develop user controls that can improve transparency and explainability of AI systems for different audiences.

Control is about empowering people in their interactions with AI systems. The relative complexity of AI means that explaining how and why a system uses information, such as someone’s personal data, is not always straightforward.

Giving people a lot of information about how their data might be used is not the same as meaningful control: it shifts responsibility onto the user and away from the product or system maker.

Meaningful agency means control over inputs and the ability to feed back into AI processes. It may also include a choice between the outputs of AI systems.

“When there are tons of pop-ups or big blocks of text, I just click through.”

Rochelle

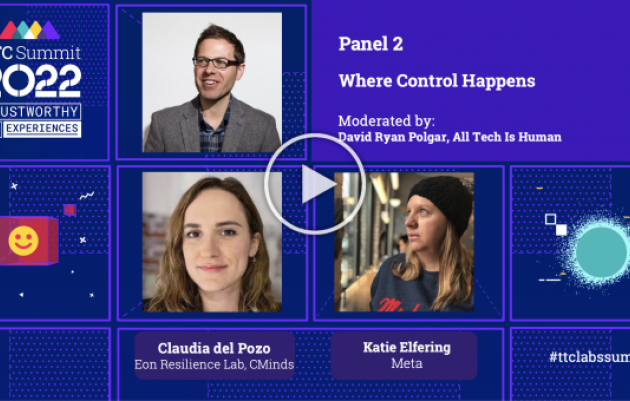

Panel Discussion: Where Control Happens

David Polgar moderated this panel discussion on control and AI at the TTC Summit, with Claudia del Pozo from CMinds and Katie Elfering from Meta.

Accountability

Accountability focuses on tracing and verifying system outputs and actions. It involves being clear about which parts of a system a person is accountable for and to whom they are accountable.

Policymakers around the world are increasingly advocating for cross-sectoral AI accountability and transparency. TTC Labs supports this push, and the need to work closely with industry to create mechanisms which are both meaningful and pragmatic.

Developing an AI system, much like producing a new drug or building an aeroplane, can involve numerous people, and even different companies. For the end user to be able to use a product confidently, they need to feel sure that there is appropriate oversight of the development process.

For AI systems, one way of doing this is to map the person or entity who is responsible for each part of the system across the development lifecycle, and to whom they are accountable.
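One way to picture such a map is as a simple table of records that can be checked for gaps. The following is a minimal sketch under stated assumptions: the lifecycle stage names, the teams, and the `Responsibility`, `find_gaps` and `chain_for` names are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Responsibility:
    stage: str           # lifecycle stage (hypothetical names below)
    component: str       # part of the system
    owner: str           # person or entity responsible for it
    accountable_to: str  # who the owner answers to


def find_gaps(stages, records):
    """Return lifecycle stages that have no responsible owner recorded."""
    covered = {r.stage for r in records}
    return [s for s in stages if s not in covered]


def chain_for(stage, records):
    """List (component, owner, accountable_to) entries for one stage."""
    return [(r.component, r.owner, r.accountable_to)
            for r in records if r.stage == stage]


# Illustrative data only; none of these entries come from the report.
LIFECYCLE = ["data collection", "model training", "evaluation", "deployment"]
RECORDS = [
    Responsibility("data collection", "training dataset", "Data team", "Privacy officer"),
    Responsibility("model training", "ranking model", "ML team", "Product lead"),
    Responsibility("deployment", "recommendation feed", "Product team", "Oversight board"),
]

gaps = find_gaps(LIFECYCLE, RECORDS)
training_chain = chain_for("model training", RECORDS)
```

Here `gaps` would flag the evaluation stage, which has no owner in the example data. Surfacing unowned stages like this is one practical use of an accountability map.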

Stay tuned

With a lot more work to do in this space, TTC Labs is exploring ways to support and encourage sensible, unified approaches to AI accountability.

Are you working in this space? We’d love to hear from you!

Our explorations on this topic are just a beginning. These challenges require more work, and more insight from the wider community.

Get in touch

Passionate about AI Explainability?

Don’t miss any news, reports, or research we publish in this area — sign up to the TTC Labs email list and we’ll keep you in the loop with monthly emails.