Abstract

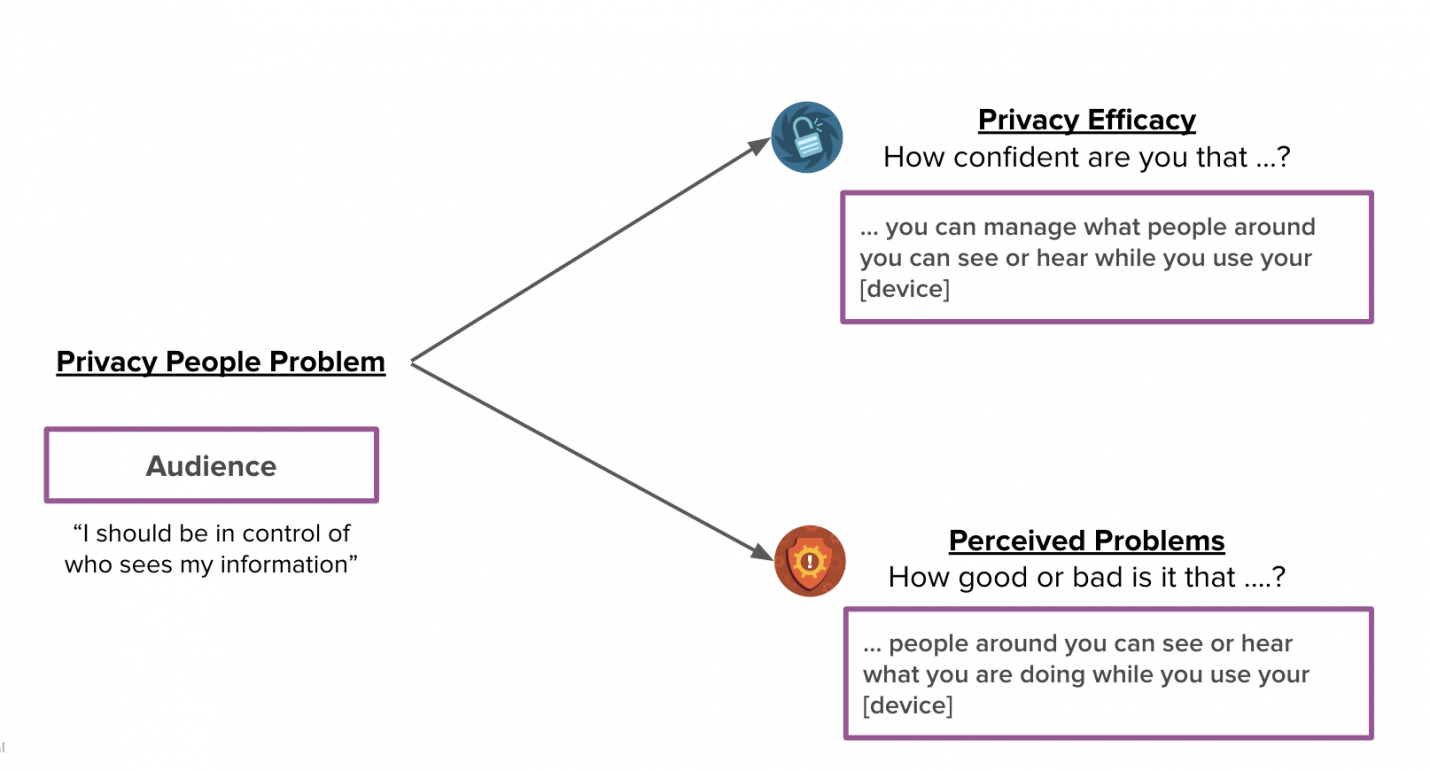

Product experiences that address people’s perceived privacy problems can build “privacy efficacy,” or users’ confidence in their privacy. Across multiple studies, we found that respondents with higher privacy efficacy were more likely to trust and use Augmented Reality (AR) smart glasses and Mixed Reality (MR) headsets.

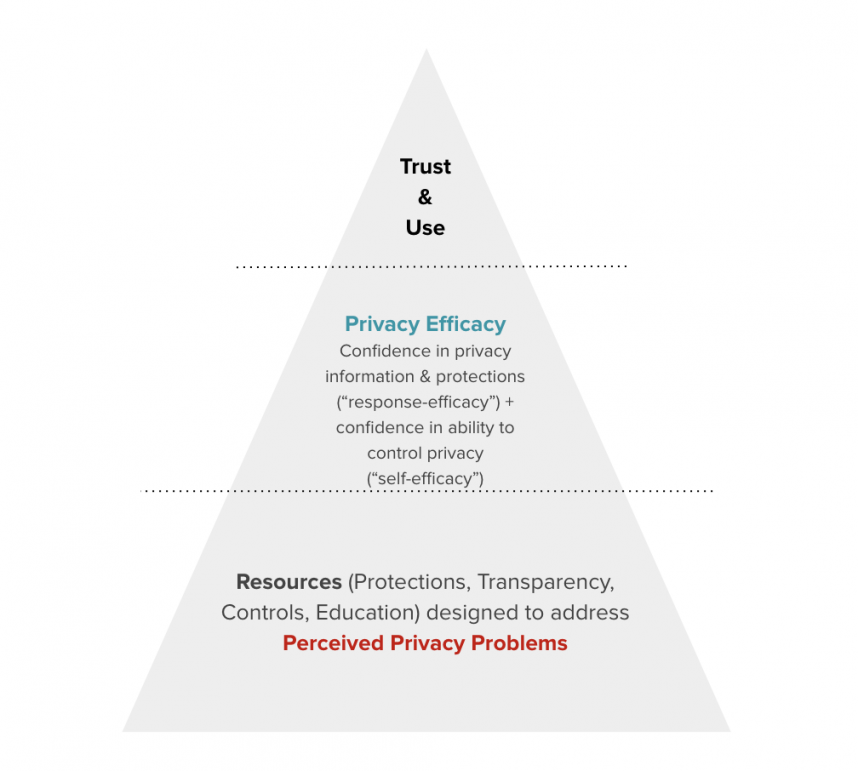

We built a Trust Framework to help product developers understand how proactively designing for privacy may earn people’s trust and facilitate their use of AR/MR products.

Report

Some people believe that using AR/MR devices like headsets and smart glasses comes with certain privacy risks. For example, some people may worry about how their data is being used, or that using the device may affect the privacy of people around them. For companies like Meta that build AR/MR products, it’s important to understand these beliefs and identify opportunities to address them. At Meta, we’ve been exploring whether AR/MR product manufacturers have an opportunity to build users’ confidence in their privacy (“privacy efficacy”) by addressing users’ potential privacy concerns (“perceived privacy problems”). In this article, we summarize research on the relationship between privacy efficacy and the trust and use of AR/MR products.

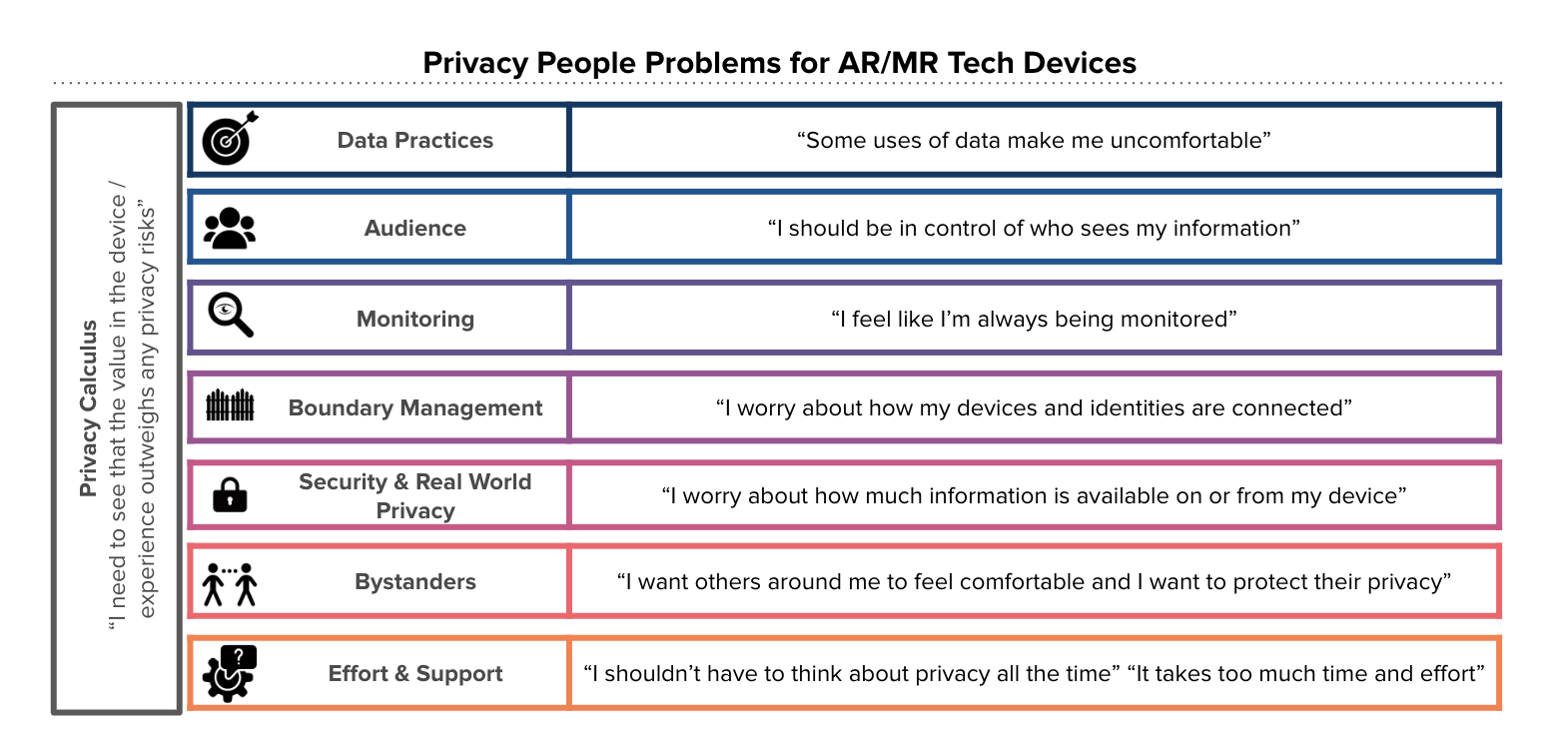

Perceived Privacy Problems in AR/MR Products

“Perceived privacy problems” refers to the privacy risks that some people believe are associated with using a product. Social media and messaging app user respondents have reported concerns related to data collection and use, data access, and interpersonal privacy (Hepler & Blasiola, 2021). However, people’s perceived privacy concerns about new hardware devices, such as an MR headset or AR smart glasses, may differ from those about social apps because:

- People may be unfamiliar with novel products that deploy old technologies in new ways (e.g., devices that place cameras on a person’s face) or that collect and use new, sensitive data types (e.g., eye- or hand-tracking data used to make the products function).

- These devices can be used in public settings, which may create bystander privacy concerns (e.g., worries about the privacy of people nearby), especially because social norms around the use of these devices are still developing.

- Some of these devices have not yet entered the mainstream; as a result, privacy considerations could be a more salient factor in product adoption.

- When people pay for a device or an application, they may have different expectations about data collection and use because they have directly compensated the company or developer.

Based on this emerging landscape, Meta researchers reviewed internal research and identified Privacy People Problem statements for new hardware technologies in the AR/MR space. These statements are based on research participant feedback and reflect a range of perceived privacy problems that people might associate with using AR/MR products.

Resources that address Perceived Privacy Problems build Privacy Efficacy

Companies making new tech devices can provide resources that support and empower people who perceive privacy problems with device use. For example, companies could offer four types of resources that may help address users’ perceived privacy problems:

- Privacy protections (e.g., hardware like LED signaling for bystanders)

- Disclosures / Transparency (e.g., information about data collection or data use)

- Controls (e.g., clear consents, easy-to-use privacy settings)

- Education (e.g., additional content that educates users about privacy features)

Product experiences that address users’ perceived privacy problems can help build “privacy efficacy,” or users’ confidence in their privacy. Privacy efficacy includes “response-efficacy,” people’s confidence that the resources available to them will effectively address their perceived problems, and “self-efficacy,” people’s confidence in their ability to manage their privacy. When product teams develop resources that address perceived privacy problems, privacy efficacy can increase. For example:

- Guided education about privacy settings that addressed perceived problems related to data and audience increased Messenger user respondents’ privacy efficacy (Menon, 2022).

- Context screens that gave additional information about apps’ requests for access to the camera, microphone, or other system information increased Facebook user respondents’ confidence in their privacy decisions, and respondents preferred them over system permission prompts shown without context screens (Talbott, 2023).

There are many reasons companies might develop product experiences that increase users’ confidence in their privacy. Beyond considerations such as improving the overall user experience or meeting legal requirements, supporting users’ privacy efficacy may also lead to positive business outcomes. Below, we describe the research that supports this claim.

Our Research

We developed survey measures of privacy efficacy and perceived privacy problems based on the Privacy People Problem statements for new AR/MR devices.

We then explored the relationships among respondents’ privacy perceptions, trust, and product use in studies spanning different products (primarily AR/MR) and markets. As summarized in the table below, our findings consistently showed that respondents who reported higher privacy efficacy were more likely to:

- adjust their settings, download and purchase apps in the Quest Store, engage with other users in MR, and use their Meta Quest MR headset; and

- feel that AR/MR products are trustworthy and report stronger intentions to purchase or recommend these products.

| Study | Sample | Method | Key Findings |
|---|---|---|---|
| Relationship between Privacy Efficacy and User Behavior | | | Respondents who reported having higher privacy efficacy were more likely to adjust their settings, download and purchase apps in the Quest Store, engage with other users in MR, and use their Meta Quest MR headset. |
| Study 1 | N = 4,568 Meta Quest user respondents in the US | In a pilot study, we delivered a short online survey on the Meta Quest mobile app in August 2022. The survey included two questions assessing privacy efficacy. Respondents’ survey answers were joined with their app downloads and purchases in the Quest Store and their sharing activity (sending messages, links, images, videos) over a 90-day period. | Privacy efficacy significantly predicted more app downloads, app purchases, and sharing (p < .05, applying a correction for the number of tests conducted). |
| Study 2 | N = 1,598 Meta Quest user respondents in the US and UK | In August 2023, as a follow-up to Study 1, we conducted a more comprehensive exploration of the relationships among respondents’ perceived data use problems, privacy efficacy, and behaviors. Respondents’ survey answers were joined with their logged behaviors. Four types of behavior were studied: Quest Store (e.g., app downloads, purchases), social (e.g., follows/followers, media sharing from the phone or headset), settings (e.g., discoverability, Horizon Profile), and overall product use (e.g., used the device in the last 28 days, time spent). | Respondents who perceived problems with the use of their data for social and ads purposes were significantly less likely to be engaged in VR across product use, store behavior, social behavior, and settings use (p < .05, applying a correction for the number of correlations tested). Respondents’ privacy efficacy was significantly correlated with 24 of 34 behaviors (71%) across product use, store behavior, social engagement, and settings use (p < .05, applying the same correction). These correlations persisted when controlling for respondents’ demographics, tenure, and stated confidence in using new smart devices. |
| Relationship between Privacy Efficacy and Trust | | | Respondents who reported having higher privacy efficacy were more likely to feel that AR/MR products are trustworthy and to report that they intend to purchase or recommend these products. |
| Study 3 | N = 1,209 VR user respondents (Meta Quest n = 568, non-Meta Quest n = 641) in the US and UK | In September 2022, we conducted an online survey to understand how privacy perceptions might relate to respondents’ intention to recommend their device to friends and family. The survey included a measure of brand trust (perception of Meta’s trustworthiness). | In hierarchical regression analyses, respondents’ privacy efficacy and perceived privacy problems each predicted intention to recommend the device (p < .05), with privacy efficacy being the stronger predictor. These relationships persisted after brand trust was statistically controlled. A comparison of standardized coefficients showed that privacy efficacy was the strongest predictor: 1.8x stronger than brand trust and 4.75x stronger than perceived privacy problems. |
| Study 4 | N = 618 Ray-Ban Stories smart glasses user respondents in the US | In early 2023, we conducted an online survey in the device’s companion smartphone app (Facebook View). We asked questions about brand trust, privacy efficacy, social acceptability, and confidence in responsible use. | In multiple regression analyses, social acceptability, privacy efficacy, and confidence in responsible use around others were each significant predictors (p < .05) of respondents’ intent to recommend Ray-Ban Stories to others, even when brand trust was statistically controlled. |
| Study 5 | N = 28,001 Smart/AR Glasses non-user respondents in the US, UK, Ireland, Germany, Austria, Italy, and France | In June and July 2022, we conducted an online survey to understand user and bystander expectations around privacy and social acceptability for Smart/AR Glasses across a variety of contexts, data capture types, and use case scenarios. We explored the links among privacy, trust, and purchase intent for smart glasses from both (a) a “user perspective,” with 1,000 respondents from each country who fit an early adopter profile for new tech devices, and (b) a “bystander perspective,” with 3,000 respondents from each country who reflected the broader general population. | Respondents’ confidence that Smart/AR Glasses would have features that can protect privacy was strongly correlated with greater trust in the tested companies (p < .05) and with stronger intention to buy Smart/AR Glasses from those companies (p < .05), including Meta. |
| Study 6 | N = 284 smart glasses non-user respondents in the US | In May 2022, we conducted an online survey on privacy attitudes toward new tech and smart glasses. The survey included measures of privacy perceptions, social acceptability perceptions, and interest in purchasing smart glasses. | Respondents’ confidence in using settings to protect their privacy on new tech devices (self-efficacy) was linked to greater interest in purchasing smart glasses, but primarily among respondents who did not self-identify as early adopters. Respondents who felt comfortable with the idea of using smart glasses in public spaces (social acceptability), and who believed that the benefits of using smart glasses outweigh the perceived privacy problems, were more likely to be interested in purchasing smart glasses. |
| Study 7 | Non-user respondents for smart glasses (n = 2,410), AR glasses (n = 2,420), and MR headsets (n = 2,423) in the US, UK, Ireland, France, Germany, Italy, Spain, and the Netherlands | In August 2023, we conducted an online survey to understand respondents’ perceptions of social acceptability across key product categories (smart glasses, AR glasses, MR headsets). The survey included measures of privacy perceptions and self-reported interest in purchasing the products. | Respondents’ confidence that a device would have features that can protect privacy was correlated with greater trust in the tested companies (p < .05) and with stronger intention to buy a device from those companies (p < .05), including Meta. |
| Study 8 | Phase 1 n = 1,521 and Phase 2 n = 1,510 non-rejectors of voice assistants (people who are at least somewhat open to using them) in the US | To identify drivers of trust in voice assistants, in late 2022 and early 2023 we conducted two phases of survey research that included measures of privacy efficacy, trust, and intention to recommend the voice assistant to others. | Respondents said that company reputation was the most important factor; however, latent variable modeling in Phase 2 revealed that privacy efficacy was a key driver of trust and of likelihood to recommend respondents’ preferred voice assistant to others. |
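Studies 1 and 2 report significance after applying a correction for the number of tests or correlations, but the procedure is not named. As a minimal sketch, assuming the Holm-Bonferroni step-down method (one common family-wise correction, not confirmed to be the one used in these studies), the function below decides which of m p-values remain significant at α = .05. The function name `holm_bonferroni` and the example p-values are hypothetical, not study data.

```python
from typing import List


def holm_bonferroni(p_values: List[float], alpha: float = 0.05) -> List[bool]:
    """Flag which p-values are significant under Holm's step-down procedure.

    Hypothetical helper: controls the family-wise error rate at `alpha`
    across all m tests. Results are returned in the original input order.
    """
    m = len(p_values)
    # Visit p-values from smallest to largest, remembering original positions.
    order = sorted(range(m), key=lambda i: p_values[i])
    significant = [False] * m
    for rank, i in enumerate(order):
        # The (rank + 1)-th smallest p-value is compared with alpha / (m - rank).
        if p_values[i] <= alpha / (m - rank):
            significant[i] = True
        else:
            # Once one test fails, every larger p-value also fails.
            break
    return significant


if __name__ == "__main__":
    # Hypothetical p-values for five behavior correlations (not study data).
    p = [0.001, 0.04, 0.012, 0.2, 0.009]
    print(holm_bonferroni(p))  # [True, False, True, False, True]
```

In this example, plain Bonferroni (every p-value compared with 0.05/5 = 0.01) would keep only p = .001 and p = .009, while Holm's increasingly lenient thresholds also keep p = .012. With 34 tests, as in Study 2, the smallest p-value must be at or below 0.05/34 (about 0.0015) under either procedure.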

These findings suggest that products that increase privacy efficacy may increase trust and unlock purchase and use of AR/MR tech. This is consistent with external findings that privacy and trust are relevant to people’s acceptance and adoption of new technology (Aslam, Siddiqui, Arif, & Farhat, 2022; Fernandes & Oliveira, 2021; Kowalczuk, 2018; Liao, Vitak, Kumar, Zimmer, & Kritikos, 2019; Vimalkumar, Sharma, Singh & Dwivedi, 2021).

One important caveat is that the results were correlational: we can’t determine whether privacy efficacy caused respondents to trust and use new tech devices, or whether these measures were correlated for some other reason (e.g., users of this type of technology may already have high levels of privacy efficacy). Future research could test this link over time, for example, by offering additional education that increases device users’ privacy efficacy and then evaluating whether it subsequently leads to increased trust and use of new tech devices.

Based on this research, we built a Trust Framework to help technology companies understand how privacy and trust play a critical role in people’s relationship with, and use of, new tech like AR/MR products.

Note that proactively designing experiences that address perceived privacy problems can, but may not always, have near-term effects on the adoption and use of new technologies. This is because of the nature of privacy experiences: they are highly contextual, may resonate with some user segments more than others, and may create tension with other aspects of the user experience.

Meta’s consumer hardware teams have adopted the Trust Framework to build innovative experiences that support users’ privacy. To help other organizations implement the Trust Framework, we’ve included questions from the Privacy Efficacy & Perceived Problems Survey (“PEPPrS”), which we designed, in the Appendix. These questions can be adapted to measure privacy efficacy and perceived privacy problems for other products or services.
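How PEPPrS responses are scored is not described here. As a minimal sketch, assuming each adapted scale (for example, privacy efficacy) consists of several Likert items rated 1 to 5 and is scored as the item mean, the code below computes per-respondent scale scores and Cronbach’s alpha, a standard internal-consistency check when adapting survey items to a new product. The item structure, rating range, function names, and ratings are all hypothetical.

```python
from statistics import mean, variance
from typing import List, Sequence

# Each row is one respondent; each column is one survey item (hypothetical 1-5 ratings).
Responses = Sequence[Sequence[float]]


def scale_scores(responses: Responses) -> List[float]:
    """Score each respondent as the mean of their item ratings (assumed scoring rule)."""
    return [mean(row) for row in responses]


def cronbach_alpha(responses: Responses) -> float:
    """Internal consistency of a multi-item scale.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores),
    using sample variances (n - 1 denominator) throughout.
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    items = list(zip(*responses))  # transpose: one tuple of ratings per item
    item_var_sum = sum(variance(item) for item in items)
    total_var = variance([sum(row) for row in responses])
    if total_var == 0:
        raise ValueError("total scores have no variance")
    return k / (k - 1) * (1 - item_var_sum / total_var)


if __name__ == "__main__":
    # Hypothetical ratings on two privacy-efficacy items (e.g., one self-efficacy
    # item and one response-efficacy item); not PEPPrS data.
    ratings = [[5, 4], [4, 4], [2, 3], [1, 2], [3, 3]]
    print(scale_scores(ratings))
    print(round(cronbach_alpha(ratings), 3))
```

For these made-up ratings, the scale scores are 4.5, 4, 2.5, 1.5, and 3, and alpha is about 0.88. Averaging (rather than summing) keeps scores on the original 1 to 5 range, which makes them easier to compare across scales with different numbers of items.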

Implications

The Trust Framework indicates that companies that build new tech devices should offer resources that address people’s perceived privacy problems to build privacy efficacy, earn trust, and support device use.

Based on this idea, Meta has developed resources for product teams, consumers, and experts:

- Meta’s responsible innovation principles are foundational to product development in hardware, and guide how we build our products at every stage of the product development process.

- On the websites for Meta Quest and Ray-Ban Meta smart glasses, Meta publishes information for users and other stakeholders about the privacy protections and controls that support the user experience.

- Meta collaborates with academics and policy experts to publish whitepapers on privacy considerations for new tech (e.g., Bystander Signaling for Smart Glasses, Mixed Reality).

These efforts help varied audiences understand how Meta’s AR/MR teams design for privacy as the industry builds the next generation of technology. Building a future that protects people’s privacy is paramount to responsible innovation and is a core part of Meta’s vision.