Summary

This interim update is part of our commitment to keep external stakeholders informed about the progress of our global co-design initiative. We are sharing this update with expert session participants and stakeholders from governments, academia and non-governmental organizations.

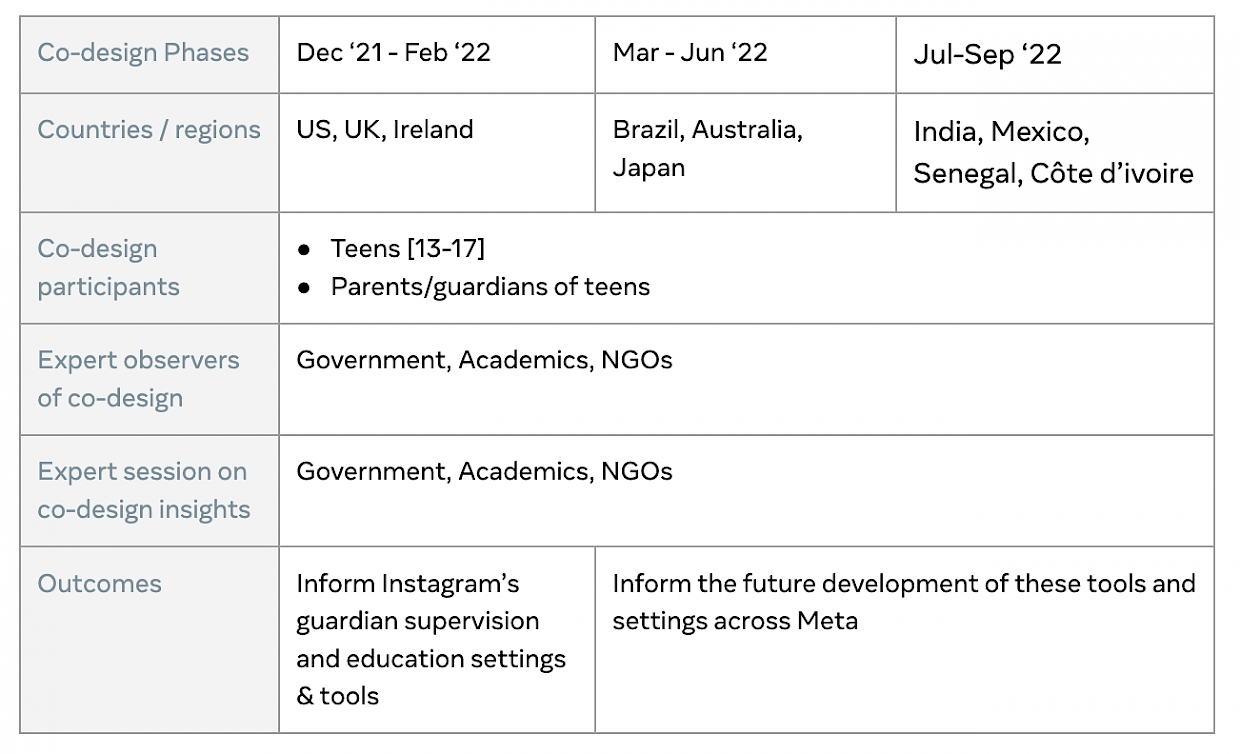

This update includes high-level insights from the six countries to date in which we’ve paired co-design sessions with teens and parents/guardians with an expert consultation session. So far as part of this initiative, we've conducted co-design sessions with 103 teens and 92 parents/guardians, and consulted with 87 experts.

This update also points to 2 new articles published on the TTC Labs website this month. The first article explores some of the ways in which Meta applies the “Best Interests of the Child Standard” when designing digital experiences for young people. The second article explores our approach to meaningful youth participation: developing a co-design methodology with global youth and families who use our products, as part of our research to inform the development of Family Center and Education Hub.

Background

As we work to support young people and their families across Meta technologies, we’re committed to learning from teens, parents, guardians and experts. Through our global program of co-design and expert consultation, we use a multidisciplinary research approach that invites people to participate as collaborators in our design process, empowering them to have their say and ensuring our products meet their needs.

Over the last six months, we’ve built on TTC Labs’ multi-year effort to design for young people's safety and privacy across Meta technologies. Through our partnership with Smart Design, we’re conducting a phase of co-design sessions with teens and parents and consulting with experts around the world.

This co-design and consultation initiative is driven by our goal to provide safe and empowering experiences for teens as they learn, create, play and interact online. Our approach to supporting families is grounded in children’s rights principles and the UN’s best interests of the child standard. Regulations such as the ICO’s Age Appropriate Design Code and the IDPC’s Children’s Fundamentals are also foundational to how we build privacy and safety into our technologies. These principles and standards emphasize the importance of providing safe, age-appropriate digital environments for youth that promote their emerging autonomy while also recognizing the roles, rights and responsibilities of parents. They also underscore the need to build for diverse families globally, considering different household situations, abilities and forms of care and equal and effective access to technology.

Our program is focusing on topics related to parental supervision and teen autonomy, as well as household digital literacy and social media education.

While building tools for all teens (aged 13-17), we’re hearing from parents and young people themselves that early teens are in a unique transitional phase as they are just beginning their social media journeys and are learning how to develop positive habits around technology. We’re also learning that teens and parents aspire to have a relationship based around trust, transparency and communication. That’s why we’re providing parents with insights that can support targeted conversations and open dialogue around social media.

We’ve held sessions with teens and parents in the US, the UK & Ireland, Brazil, Australia and Japan, with further engagements planned in India, Mexico, Senegal and Côte d'Ivoire, to inform how we develop parental supervision tools and settings. While we’ve conducted user research with young people and parents for a long time, this program offers an additional collaborative space to design with young people, caregivers and families, hearing their voices and taking their needs into account by co-creating with them.

As we expand and iterate on this program, we will broaden our focus to include a wider set of safety, privacy and wellbeing-related topics. We’re especially interested in hearing from a range of families on how variations in teens’ age, household structure, level of parental oversight and socio-cultural context play out in teens’ and parents’ needs and motivations.

These co-design sessions are paired with consultations with external experts from government, civil society/NGOs and academia. We’re engaging a range of individuals and organizations dedicated to holistic, youth-centered development that provide direct services to community-based youth groups across sectors, including education, health and wellbeing, digital literacy, privacy, and safety. The program is also piloting new collaborative models, such as inviting these expert groups to nominate teens and parents from their communities, observe the research and participate in a session to provide feedback and explore co-design insights and dilemmas together.

Goals & intended outcomes

The main goals of the initiative are to:

Elevate the voices of young people and support families through meaningful participation in the Meta product development process

Expand and deepen consultation and partnerships with experts to guide Meta product development decisions & processes

Communicate regularly and transparently how external feedback from teens, parents/guardians and experts guides product and policy development

A cross-functional, global Meta team including youth research, policy and product is leading this initiative, which focuses on co-design as a meaningful method to engage with teens, guardians and experts throughout the product development process for Family Center and Education Hub. Consultation, collaboration and co-design reflect a combination of priority focus areas we’re addressing across Meta products and services:

Safety, privacy and wellbeing across the family of Meta apps

Parental/guardian supervision and teenagers’ autonomy

Teen/guardian digital literacy and social media education

Age-appropriate experiences

This initiative will primarily inform the continued development of Meta’s guardian supervision tools, age-appropriate safeguards and an educational hub for parents and guardians. Additional outcomes include: (i) insights from teens and parents/guardians that help drive products and features that meet their needs and are in the “best interests of the child”; (ii) expert feedback that informs the development of guardian supervision and teenagers’ autonomy, teen wellbeing, and teen/guardian social media education settings and tools across Meta, including Instagram and Reality Labs; (iii) new and strengthened feedback mechanisms and collaborative spaces between youth product, research and policy teams and experts from civil society / NGOs, academia and government.

ARTICLE: How Meta applies the "best interests of the child" standard to designing digital experiences for young people

At Meta, we have developed a framework to help us apply the UN’s Convention on the Rights of the Child directly to the products and experiences we build. We complemented our own internal research with input from global data protection regulators to create the Meta Best Interests of the Child Framework, which distills the “best interests of the child” standard into key considerations for product teams to consult throughout the product development process.

This article provides more information on each consideration that is part of the Framework, including a subset of questions, resources and case studies.

Learn more

Co-design & consultation activities

Following the launch in March of Family Center and the next phase of its global rollout in June 2022, we are conducting co-design and consultation in additional countries to help guide future product development of guardian supervision tools and settings across Meta, including Instagram and Reality Labs, by focusing on better understanding the diverse needs of teens and families. This initiative is part of a wider range of activities:

Co-design sessions with (1) teens [aged 13-17] and (2) parents/guardians followed by expert sessions exploring the insights and challenges surfaced through co-design

Expert fora as part of global, regional and country-driven initiatives, such as the TTC Labs global Youth Summit, Meta’s Youth Advisor program, regional Privacy Data Dialogues, and Instagram’s Policy Programs partnerships, among others.

Producing guidance, insights and learning resources around how to design for privacy, safety and wellbeing across digital experiences for young people and family contexts, for Meta teams and for external audiences through expert collaboration.

Communicating progress on how these insights and feedback may inform the development of Meta products.

Co-design methodology

The initiative’s methodology focused on co-design with teens aged 13-17, parents/guardians of youth aged 13-17 and consultation with experts dedicated to youth privacy, safety and wellbeing from civil society, academia and government.

The methodology was developed jointly with Smart Design, a human-centered design and innovation consultancy with deep co-design expertise. It combines qualitative research through co-design in an initial set of pilot countries (see further below) with consultations with experts from NGOs, academic institutions and government agencies. Co-design is a method that can generate relevant insights, and we are focusing on (1) learning about co-design as a meaningful participation method for engaging youth and families in the product development process and (2) advancing knowledge on youth safety, privacy and wellbeing to inform product principles and guidance, as well as global policy development.

ARTICLE: How we’ve used co-design to develop parental supervision tools at Meta

We focused this first phase of the co-design program to help us explore key questions relevant to the design of Family Center, including how to:

- Design for the diverse needs of teens, both within and across age groups

- Account for vulnerable groups who might have particular privacy and safety needs

- Enable young people to learn from peers, parents/guardians, and other trusted adults

- Promote youth autonomy while considering the rights and duties of parents/guardians

- Recognize and engage global youth and families using our products

This article outlines a few ways in which we developed co-design tools to enable the meaningful participation of teens and parents/caregivers in our research to inform the development of Family Center and Education Hub.

Learn more

High level summary of co-design and consultation insights to date

We’re providing a high level overview of some of the co-design and consultation insights for guardian supervision and teen autonomy across countries. Note that we’re planning to develop a report to be published by the end of 2022 that will detail our co-design approach and synthesize research insights into usable frameworks and wider learnings.

🇺🇸 US key insights

We held paired engagements:

90min co-design sessions with participants including 30 teens aged 13-16 and 28 parents/guardians - 6-9 Dec

120min insights session with 19 experts from academia and civil society - 19 Jan

Key insights from co-design participants in the US:

👀 Teens are amenable to parental supervision and oversight when it allows them to maintain a sense of control. Teens were open to parents having visibility into some aspects of their social media use, as long as they can remain “in charge” of decisions like who they follow and when/how long they use the app. Over time and as they demonstrate their maturity, teens want parents to do less setting of hard limits and move into a phase where they can periodically see what their teens are up to (e.g. screen time insights).

📈 Teens also welcome supervision when it offers them clear value and a pathway to independence. Teens are looking for ways to demonstrate to their parents that they can handle greater responsibility online. They see supervisory tools as useful when they can be used to prove to their parents that they should be granted new freedoms or responsibilities, not as something that is static or inflexible.

😤 Some types of supervision are considered too restrictive by older teens and likely to drive them to create second accounts and reject parental oversight entirely. This includes features that inhibit teens’ ability to make decisions for themselves (highly restrictive screen time limits, approving followers/following, blocking content) or features that invade the privacy of others (e.g. reading DMs, accessing friends’ profiles).

⏰ Parents are time constrained and want straightforward ways to keep their teens safe. They're not interested in the constant monitoring of their teen’s account. They expressed the desire to be notified by the platform only if something out of the ordinary were to happen or there’s cause for concern. They wanted additional information to help contextualize insights about their teen’s social media activity.

🎯 Parents want tools that help them have more targeted conversations with their teens and that can make those discussions around social media less adversarial. Social media can exacerbate underlying issues around communication and trust between parents and teens. Many parents struggle to know when they need to have a conversation with their teen about social media, and exactly what they need to talk about or how to approach it. Parents felt that it would be helpful to have an objective starting point to know what topics warrant further discussion.

High level insights from the US expert session participants:

Rethinking the approach to parent/guardian supervision surfaces and settings

Experts from academia and NGOs underscored the importance of putting teens in the center and were especially sensitive to language around “controls,” which they perceived to refer to something top-down, inflexible, and parent-centered with too high a focus on adults getting young people to “do what they want them to do” instead of facilitating conversations that pave the way for teens’ evolving autonomy.

Experts in the session argued that the approach to guardian surfaces and settings should be framed from the lens of education, not control, in order to establish a path towards self-supervision and teen-guardian trust-building.

The best case scenario is that teen-parent/guardian relationships are based on trust. There are many stimulating factors that can influence how parents perceive their role. It’s important to take a step back to understand these influences. Experts noted that some research has found that parents feel social pressure to appear more strict than they actually are (seen as a sign of being ‘a good parent’), in terms of both peer pressure and social media pressure that can also influence their parenting practices.

Teens may be more likely to accept the rules if they’ve had a part in setting them — where teens can hold parents accountable to shared/family/household goals. Experts highlighted the opportunity to encourage reciprocity through co-learning, co-creation and facilitation of conversations.

Designing for multiple guardianship models, accounting for context and clarifying goals to guide decision-making.

Frameworks can be a helpful starting point to understand teen-guardian relationships and parenting practices. However, experts highlighted that they can also be limiting, as specific context and cultural surroundings are not taken into account. Parenthood is context-sensitive and parenting practices fluctuate across a spectrum, not necessarily remaining in one type.

Experts emphasized that multiple guardianship types, networks of care and non-traditional “nuclear” families should also be accounted for.

Experts considered parent mediation models, where strategies may fluctuate and shift over time and in different contexts, encompassing restrictive (rule setting), active (communication & engagement) and co-use (potentially drawing on video game models).

Equally important is to understand why parents resort to any of these mediation strategies: understanding their motivations and practices through specific examples, including whether they are oriented towards promoting teens’ autonomy or not.

There is currently no ‘value judgment’ placed on the guardianship typology. If this is aimed at guiding decision-making, however, experts noted that it’s important to be clear about the overall goals. Moreover, different goals, such as designing for guardian-child trust-building, individual teens’ wellbeing, or improved trust in Meta’s products for youth may yield different outcomes to inform decision-making. Consider defining universal goals, outcomes, use cases and features over solving for a particular parent/guardian typology.

Emphasizing tools and in-app learning experiences to be places for youth understanding, evolving autonomy and self-learning

Experts argued for teen-centric tools and in-app learning experiences designed for and with youth, with stronger consideration given to the need for identity exploration and the challenges this creates. Tools should help teens to amplify safe and healthy practices, and promote more positive experiments and challenges.

Experts argued that there is a need to focus on meaningful social media use rather than on screen time limits or one-size-fits-all settings. For teens, the tools should prompt them to understand their social media habits and encourage critical thinking and self-learning. Screen time insights must provide a clear value to teens and be framed in ways that resonate with their goals.

For parents/guardians, experts underscored the need to consider 1) variations in literacy and digital literacy skills; 2) time constraints; and 3) value they get from supervision. The tools should provide them with aggregated insights that help guardians to have positive conversations with teens.

Experts were also supportive of the distinction between early and late teens and providing guardians with more options to receive insights or notifications in the case of early teens. In addition to giving early teens a way to show their parents/guardians that they’re behaving responsibly online, experts pointed to safety reasons, such as having the guardian act as an “emergency contact” when a teen reports something, gets blocked or blocks an account. Some experts, however, highlighted the need to foresee unintended consequences. The recommendation was to give teens the choice to enable guardian supervision with specific features designed to promote constructive conversations while reducing the barriers to information sharing from teens to guardians.

Customization is important as needs vary across individuals and family contexts. Tools should be flexible and above all, encourage communication between teens and guardians. Educational resources and tools should be customized based on maturity levels more than age.

Teen, Parent, Family is of course an essential framing, but consider that social media’s influence is societal (on and off digital). There is an opportunity to help set prosocial behavioral norms by uplifting others and encouraging empathy.

Experts provided a range of recommendations regarding the use of in-app tools to support teens’ education and self-learning, such as:

Experiential learning models

Pop-ups, signposts and positive challenges in collaboration with creators.

Peer-to-peer education.

🇬🇧 UK & 🇮🇪 Ireland key insights

We held paired engagements:

90 min virtual co-design sessions with 22 teens aged 13-18 and 20 parents/guardians - 24-28 Jan

120min virtual session with 22 experts from government, academia and civil society for feedback and consultation - 16 Feb

Key insights from co-design participants in the UK & Ireland:

🎯 Both parents and teens aspire to a relationship based on trust and communication. Parents want more actionable insights in order to have more targeted and productive conversations with their teens.

🤝 Parents used a combination of rule setting, monitoring, and communication to supervise their teens. Their approach was based on (1) level of awareness around their options to monitor; (2) perceptions of the risks of social media; (3) personal relationships or experiences with social media; and (4) level of trust between parent and teen.

⚖️ Teens want tools that help them prove maturity and gain trust. When the level of oversight is too high for teens, they often feel like they are not trusted. Teens are looking for ways to show their guardians that they can handle greater responsibility, either through proof points or a well-articulated plan.

👥 Parents want to be related to, not advised. Instead of tips or advice, parents would rather hear from others about how they navigated similar experiences. Educational materials should present options and ideas rather than strict “how tos” to account for different situations and guardian-teen relationships.

🥅 Teens are more likely to self-monitor in response to intrinsic motivation. Teens are more likely to self-monitor when it offers them direct value and helps them accomplish their goals. These motivations include presenting themselves well to others (e.g. helping them secure employment), helping them focus or accomplish tasks (e.g. getting better sleep or not procrastinating) and improving mental health or combatting low self-esteem.

🤷‍♀️ Knowing the technology gives guardians a leg up on supervision. Parents who wanted to play a larger role in supervising their teens online told us their lack of knowledge around how the platform worked was their biggest barrier.

High level insights from the UK & Ireland expert session participants:

Balancing guardian supervision and teen autonomy

Focus on early/older teens. Experts agreed that highlighting family-oriented surfaces to early teens feels right. Parental settings are always recommended for guardians with younger children, but for teens, it becomes more important to empower parents to have better conversations rather than rely only on controls.

Building an ecosystem of family controls, settings & supervision. Experts suggested that Meta should work to create a positive digital environment that fosters healthy habits by default through multiple mechanisms rather than an “on/off switch” for supervision. As children gain autonomy, they continue to seek support, but start using the tools provided by the organization. They’re still learning to navigate social media, albeit relying less on their parents for guidance.

Develop a holistic, risk-based approach when refining supervision defaults and self-monitoring settings and tools. In line with the notion that supervision is not an on/off switch, experts recommended approaching the iteration of supervision settings to consider a broader set of indicators beyond age, e.g. period of time a young person has been using the app, anomalies indicative of behavior changes, etc.

Access to “smart” family insights. Experts suggested that providing parents/guardians with access to “smart” insights compared to all info about their teen may be preferable as it can be more actionable for them and help to protect youth privacy.

Opportunities for fostering healthy social media habits and education

Parent education. While many parents and guardians feel like their teens are already ahead of them when it comes to social media skills, others are personally very familiar with how they work, but lack insight into how their teens' experience might be different. Experts suggested that there's a continued opportunity for product makers to provide parent/guardian education about platform and teen experiences.

Supporting conversations. Experts suggested that parents’/guardians’ lack of empowerment with regard to their children’s social media use may stem from their lack of confidence about how to approach monitoring and conversations with teens. For older teens, experts suggested there is a need to further define the types of conversation for which to optimize - consider teen typologies to complement the parent typologies suggested by the research findings. Experts argued that conversations usually happen when something has already gone wrong, usually starting at a “recovery moment”, but what might earlier interventions look like?

Expiration date for settings. Experts suggested that there’s an opportunity to prompt guardians and teens to re-evaluate their experience over a period of time. A young person’s experience online is not static; they might need more or less support at different times.

Peer-to-peer support. Siblings and peers are a vital source of support for teens who can’t speak to an adult; however, experts argued that U18s should not have access to supervision settings due to legal and privacy concerns. Additionally, there is an opportunity to leverage the research finding that older teens are paternalistic about younger teens. Education and peer support from the voices of older teens could resonate with younger teens, and empower older teens to become role models.

Teen education. Partnering with creators and influential voices can be an effective way to engage teens, but it should be done in a more casual manner, according to experts. Teens respond well to relatable stories that explain what the risks are, instead of stories that are meant to scare them. It should highlight that seeking help is a positive action for teens. Consider investing in up-front transparency, checkups and other educational approaches e.g. accounts that teens automatically follow or year in reviews.

Early education for parents and U13. Experts suggested there was a need for product makers to find ways to prepare both parents and teens ahead of 13yrs. It’s important to better understand at what points parents and children start navigating this experience together and how these conversations take place. How might we provide support to these conversations and parents’ preparedness to have them?

Wider role of co-design. Experts emphasized that companies should be working closely with teens to understand what success looks like from their point of view, following up with teens to understand what works and what doesn’t, as well as working with youth and experts to develop metrics for empowerment and understanding (e.g. do older teens feel more positive about their online experiences over time?)

🇧🇷 Brazil key insights

We held paired engagements:

6 x 90 min virtual co-design sessions with 16 teens aged 13-18 and 16 parents/guardians - 24-28 Mar

120min virtual session with 15 experts from government, academia and civil society for feedback and consultation - 26 Apr

Key insights from co-design participants in Brazil:

🧫 Key cultural differences

In Brazil, the extended family (siblings, godparents, aunts, uncles) and the local community share more of the responsibility in supervision than we've seen in other countries. When it comes to assigning supervision duties via Family Center, the line is drawn at members of their own household (which could include siblings or cousins). However, parents often look to their neighbors and friends for support in keeping an extra eye on their teens in less formal ways.

While worries over inappropriate content, stranger danger, and screen time remained present, concerns around theft, scams, and hackers were particularly heightened in Brazil for both guardian and teen participants.

More so than in other countries, we heard from Brazilian parents that they were inclined to go “above and beyond” in monitoring their teen and taking direct action to keep them safe, either because they find technological solutions ineffective or slow or because they are not sure they can rely on them. They need more reassurance that the platform is doing its job at protecting their teens or more education around the existing actions they can take.

🪡 Customization

Parents want certainty that the platform will get their attention on high-priority matters and allow them to customize everything else.

Guardian participants in Brazil are also mindful of invading their teens' privacy but believe they can de-escalate these interactions by having objective “third party” information from an app as a starting point for a conversation. Both teen and guardian participants discussed how some of these settings and controls might actually help to make conversations and interactions around social media less adversarial.

Guardians prefer standardized settings when it comes to high-priority safety matters so they can feel confident they are not missing anything important. For example, they want the app to take a stance at filtering out violent, sexual, or explicit content that is not suitable for teens; they want to receive alerts for suspicious followers or DMs; and they want to receive alerts if their teen has reported something.

On the other hand, they are looking for a more customized experience when it offers them a chance to apply their personal values, preferences and approaches to parenting. This includes customized screen time controls (they want to be able to set custom limits based on their teen’s schedule or time of year), keyword alerts (they may have specific concerns relating to their teens they want to monitor more closely), personalized educational content (they only want to receive resources and tips on topics relevant to their situations) and how and when alerts are received (they have differing preferences on the frequency and best channel for alerts).

🎗 Teen support networks

Both teen and guardian participants in Brazil indicated they rely on a range of resources for guidance, emotional support, and intervention when more serious issues occur. There is an opportunity to bridge the gap between insight and action by helping teens and guardians communicate better.

During the Brazil sessions, guardians and teens discussed their “support networks” in terms of guardians, authority figures, friends and peers, and older siblings and teens. Participants also discussed the types of support they expect or receive which we grouped as: supervision or monitoring, practical guidance on a specific topic, emotional support related to wellbeing, and intervention in the face of a serious threat.

Teens turn to different sources depending on what type of support is needed. This suggests we should empower teens to seek help from a range of different actors beyond the platform and parents.

Teens seek support from the platform when they are determined to set boundaries or monitor their use by themselves, in order to quickly resolve an issue on their own.

Teens seek support from guardians or family members when they need guidance on a difficult issue, reassurance that they are behaving appropriately, or need intervention in the face of a threat.

Teens seek support from older siblings or older teens when they need advice from someone who has gone through a similar experience or tech help using the app.

Teens seek support from their friends or peers when they need empathy and camaraderie in dealing with issues a grown-up might not understand. Friends provide boosts of confidence and encouragement.

High level insights from the Brazil expert session participants:

Balancing parental supervision with teen autonomy

Experts valued Meta's approach to balancing parental supervision with providing a baseline experience that both empowers and protects teens, while considering their evolving autonomy.

There was general agreement on prioritizing the needs of early teens, with experts arguing that this is a critical stage of identity development and the one best suited for risk prevention. Younger teens are more vulnerable to low self-esteem insofar as they are going through a fast growth period that poses challenges to understanding their identity.

Experts noted that parent and teen relationships around social media are often adversarial, and teens may not feel comfortable talking to their parents about their problems and concerns online. This was already the case pre-pandemic (an expert referred to the IBGE 2019 survey on adolescents’ health, which showed that more than 30% of adolescents “do not consider their parents can understand their problems and concerns”).

Inspiring teens to self-supervise and monitor

Experts were cautious about teens’ motivations to opt in to parental supervision. The question “how can parents mentor/supervise their children without the risk of alienating them?” was posed. The recommendation was to approach supervision from the teen’s experience as a motivation coming from them – framing it as a “collective attitude”.

Experts suggested identifying a few key ideas on how each insight/feature might encourage teens’ uptake in a way that aligns with their goals, motivations, and interests in using the app. In their view, the app should offer a tool that allows the family to share usage, tips, challenges and perceptions to assist in the mediation process. Making this fun/cool, for example through family games, would encourage teens’ uptake and, in turn, help both teens and their guardians move towards trust-building conversations about social media use.

Supporting guardians and teens beyond supervision

Experts welcomed using the Family Center and Education Hub as channels for educating guardians. The discussion underscored (i) moving away from a “supervision/control” narrative towards communication and mentoring, and (ii) investing in multi-channel dissemination of Family Center’s availability, with an eye towards guardians with limited access to technology and low literacy levels.

Experts suggested exploring two main ideas in response to the findings about Brazilian teens’ support networks and the platform’s role:

Teens designate a “trusted contact” that is seen as a “mediator” between them and their parents. The co-design sessions showed the prevalence of the extended family [godparents; aunts/uncles; close family friends] in informal supervision and mentoring roles on social media use. While guardians generally agreed they don’t want “too many cooks in the kitchen” in formal supervision roles [custom permissions], they underscored their reliance on their social/family networks for child-care and solving difficult situations with their teens (e.g. bullying; unwanted contacts by strangers in app; etc.);

A feature to provide teens with easy, direct access to online and offline support resources. One expert suggested creating a simple protocol (similar to existing ones in Brazil for healthcare emergencies via phone) to respond to teens seeking resources or in risky situations. Other experts agreed with this suggestion.

Experts agreed with the finding from co-design sessions that teens often feel dissatisfied with reporting tools as a means to effectively address issues that concern them, such as bullying. Experts cautioned against having a tool that does not (i) offer the teen enough space to provide context, and (ii) get back to them with follow-up information or a resolution. In their view, reporting without meaningful feedback generates frustration and may reinforce the idea of a “lawless internet” or an “imaginary audience” that can negatively affect teens.

Experts emphasized the importance of following a multi-pronged approach to promoting both guardian supervision and teens’ self-monitoring. Parents’ and teens’ needs are distinct. There is an opportunity to use tools to meet teens where they are, coupled with public communication to target guardians.

Experts suggested scaling up investments in multi-channel campaigns to promote the tools’ uptake by reinforcing two-way communication and support roles: “teens teaching parents about social media and parents teaching teens about online safety.”

Experts indicated that the tools must be as appealing as the platform, and referred to the ongoing Instagram-led safety journey for teens campaign with Safernet and Instituto Vita Alere as a good example of a “universal language” that doesn’t infantilize teens.

🇦🇺Australia key insights

We held paired engagements:

6 x 90 min virtual co-design sessions with 11 teens aged 13-17 and 12 parents/guardians - April 26, 27, 28

120 min virtual session with 17 experts from government, academia and civil society for feedback and consultation - May 11

Key insights from co-design participants in Australia:

🤳 Self-supervision can be a foundation for safe and healthy use of social media platforms

Social media platforms play a central role in our daily communication through their messaging functions, especially for teens, who often use them to discuss homework and school and connect with friends and family. This can make it difficult for teens to disengage from social media completely, and setting blanket restrictions such as severe time limitations risks cutting out important channels of communication for them.

While teens want to have more control over their time online, they also want to learn how to better control what they see, and consider this important for a healthy experience. They see social media as an opportunity to deepen their interests, but find it difficult not to be pulled into the noise of their feeds, which many report includes unwanted content.

Teens often self-supervise because they are concerned with issues beyond their guardians’ immediate control, like the content and people they may come to interact with. Many teens learn how to do this through peers, and found it difficult to locate helpful resources when they were younger.

Finally, teens want to prove to their guardians they are responsible and can be trusted without forgoing their privacy. As such, collaborative tools can play an important role in supporting the relationship between parents and teens with regard to social media use.

⭕️ The role of trusted networks and closed circle supervision for healthy support pathways online

Both teens and guardians see closed circle supervision of known and trusted support networks as the best way to get help, and rely on these networks to look out for them and flag suspicious activity. Teen support networks often include friends and peers, older siblings, parents and guardians, authority figures, and platforms. Guardian support networks tend to include other parents, friends and family, extended community, experts and professionals, authority figures, and platforms. Each network provides a different level of support and is often chosen based on the issue at hand.

To equip themselves with healthy support pathways, guardians look to other parents and independent resources for information about how to talk to their teens. Understanding their teens’ online world helps guardians to be more informed and confident to undertake more difficult conversations around social media.

🤲 Fostering teen autonomy can be achieved through defining boundaries for safe use together

Guardians see social media use as a concern once it begins to affect their teens’ engagement in everyday activities and responsibilities. Teens want help finding the right balance: using safety features that are helpful but don’t diminish their online experience. They are concerned about the repercussions of setting their own safety features: how these might impact their feed, limit who they can interact with, and change their overall experience. There is an opportunity to help them understand the right settings for their desired experience.

Strengthening the relationship between parent and teen, together with interpersonal dialogue, is at the core of effective online supervision. Parents and teens see technology as an enabler of this. With “guardrails” and agreements jointly developed, guardians can take a lighter touch to supervision based on trust and communication, turning to stricter measures if these boundaries are overstepped.

High level insights from the Australia expert session participants:

The conversation around “self-supervision” should be reframed to “empowerment” to distribute the shared responsibility across teens and their support systems such as parents, platforms, and experts.

Creating the ability to identify signals of risk and providing accessible networks of support, such as access to trusted professionals at key intervention points, can enable an ecosystem in which teens can self-supervise.

Teens want to have conversations about online autonomy and safety; however, guardians often lack the know-how to facilitate this. Many guardians focus solely on risk or “zero-tolerance” approaches, despite teens’ desires to broaden the conversation. How might we normalize this conversation, and encourage having it early and often?

Experts called for a proactive approach to prevention, shifting away from the “stranger danger” narrative to a pragmatic approach that helps teens identify early warning signs and response strategies.

Creating resources for young people by young people is key in providing trusted yet relevant information from sources that teens both empathize and identify with.

The insights from these consultations continue to ground our approach to navigating guardian-teen interactions on our platforms, and help us create the right tools and resources to empower parents and teens to have conversations about healthy social media use.

🇯🇵Japan key insights

We held paired engagements:

6 x 90 min virtual co-design sessions with 24 teens aged 13-18 and 16 parents/guardians - 23-28 Apr

120 min virtual session with 14 experts from government, academia and civil society for feedback and consultation - 7 Jun

Key insights from co-design participants in Japan:

✋ Parent participants in Japan felt that it was very important to respect the privacy of their teens. Parents reported being more “hands off” and had a higher degree of trust in their teens to make appropriate choices online.

🤔 Teen participants also reported a cautious and mindful orientation toward social media. In line with parents’ perceptions, we found that teens were more cautious of risk than we’ve observed in other countries’ co-design sessions. There was a sizable cluster of teens who proceeded with extreme caution when sharing personal information online, posting, or interacting with others in order to avoid bringing attention to themselves.

👏 Japanese parent participants are especially concerned about their teens being “good” digital citizens. Parents we spoke with were highly concerned about harm their teens may be causing to others. With the means of expression being limited on a screen and a higher risk of being misunderstood, they worry that regardless of intention, other users may feel hurt due to their teen’s words or actions.

👥 Teen participants underscored their ability to socialize in new ways as a core value proposition of Virtual Reality. Teens are socially driven and the ability to make new connections is what makes online gaming and VR so alluring. In particular, the avatars used by teens in VR help teens express themselves in ways that don't feel as vulnerable.

High level insights from the Japan expert session participants:

Consider ways to provide more intuitive family-oriented surfaces and rule-setting approaches

For experts, family rule-setting practices from a young age are a strong indicator of responsible internet use. There is a lack of information on how other families are creating rules and making them work. The reasons behind rule setting should be clearly explained to parents. Some expert participants stated that some parents, out of a trusting mindset, never set rules for their children, and that these are the teens who tend to get into problematic situations online. Experts also expressed that children with internet addiction tendencies break rules, especially rules agreed with their parents and rules they set for themselves. Children who discuss the rules with their parents at a younger age, however, are less likely to violate them repeatedly.

Language and tone matter. Expert participants suggested that rules should be framed in a positive rather than a negative tone for teens, e.g. “Don’t use your phone after 10 pm” should be rephrased as “Let’s use your phone until 10 pm.” Equally, it is important to give guardians an opportunity to self-reflect on their actions and provide constructive suggestions rather than calling them out, e.g. “Do you sometimes do things like this? Let’s try to change that a little bit.” Consider creating spaces for parents to share stories around their experiences and what works.

Parents with lower levels of digital literacy or single-parent households may struggle to use features as effectively. Expert participants said that platforms might help parents to more effectively set the rules and have conversations, maybe even going as far as requiring limited or no decision-making for individual households with default modes and settings, which might help level the playing field across households.

Inspiring teens to self-supervise and monitor

Expert participants stressed the importance of an approach to self-supervision rooted in habit formation. Teens can only reflect and self-monitor if they are objectively informed about the time they spend online, and what dangers they are exposed to. Inspiring autonomy in teens is difficult and it certainly cannot be managed by willpower and enlightenment alone.

According to expert participants, many teens during and post-Covid would like more support from parents and platforms to help bring up issues, discuss and resolve them. Platforms should take a more active role in helping teens who are less likely to develop positive behaviors around internet use and to self-monitor.

Create opportunities to access advice through different levels of relationships and novel methods:

Given that some parents in Japan play games, such as digital role-playing games (RPGs), with their children, parents/guardians might be able to empathize more and co-create solutions with their children.

Vertical relationships are what teens have with parents and teachers; horizontal relationships are with friends. Role models might instead be someone in a “diagonal” position who is not an authority figure but a little older with recent relatable experiences of being a teen. A technology sempai (literally meaning someone who is a few steps ahead of you in life) is someone teens would feel more open to talking with, listening to through real-life stories, and asking questions. Teens tend to accept what these figures say, even when it is exactly the same as what the guardian says.

Mixed or Virtual Reality presents novel opportunities to access advice around responsible online behavior, including gaming. For example, experts suggested developing a VR consultation room where teens can get anonymous advice and counseling from experts in avatar form, as anonymity in a moderated space might make teens more comfortable and motivate self-monitoring. Consider building spaces in which teens feel they belong, can express themselves without fear of negative consequences and support each other. Drive awareness around the real life scenarios and relationships behind digital interactions.

Next steps

This initiative is continuing with co-design and insight sessions taking place in India, Mexico, Senegal and Côte d’Ivoire, alongside additional consultations.

We’ll continue to communicate publicly on our progress as we roll out the program to additional countries, and will publish a report and accompanying resources on the TTC Labs website later in 2022.

The co-design and consultation report will synthesize insights and explore frameworks based on our research and learnings. We will be reaching out to experts with whom we’ve already consulted as part of this project for input and feedback.

We will endeavor to continue communicating progress about this initiative with experts.

“The co-design process has clearly shown that teens like to be able to call on their parents for support and guidance, but often don't know where or how to begin. By proposing parental supervision tools across apps, Meta will help overcome this hurdle. Especially since the tools are unobtrusive, respectful of privacy, and offer the ideal training wheels for younger teens building their competence and confidence in the online social environment.”

- Janice Richardson, INSIGHT

“It is really encouraging to know that Meta has been listening to young people and their parents and creating tools that encourage timely conversations. At Parent Zone, we know how difficult it can be for parents when they feel locked out of their children’s digital worlds. With these new tools, we are seeing a shift to greater partnership between families and platforms and that is an incredibly positive step.”

- Vicki Shotbolt, founder and CEO of Parent Zone