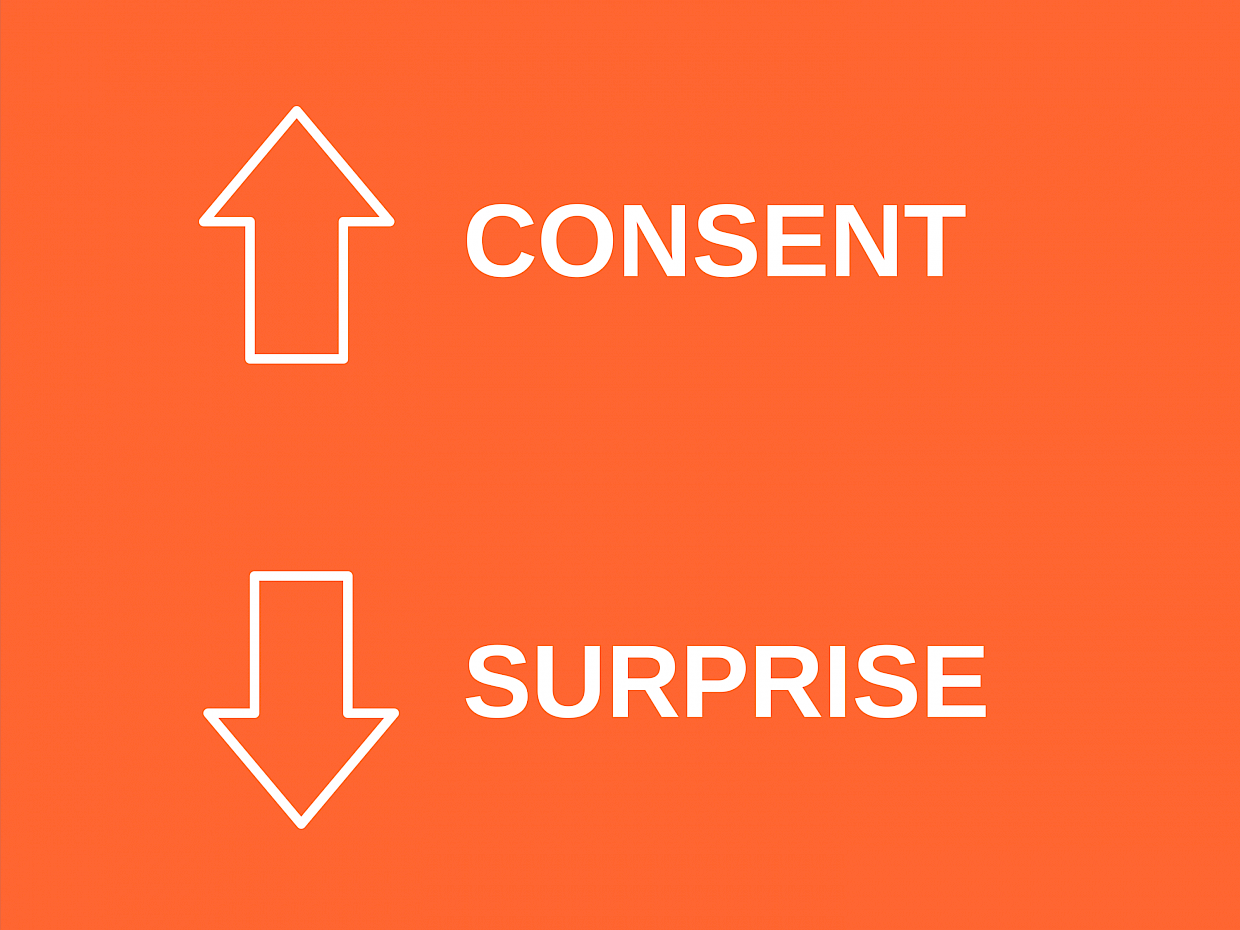

Thinking about questions around trust, transparency and control very quickly brings us to the idea of consent. That's because ethically (and, in many places around the world, legally), getting someone's consent is often an important step in processing their personal data.

Intuitively, consent seems to embody the idea that people should have a choice about what is done to them, and should understand what that choice means. Ideas of choice and understanding are bigger than consent, though; in fact, most people seem to agree that having choices and understanding the implications of those choices are important goals in themselves.

So why is it so difficult to design experiences that deliver what we might think of as genuine, meaningful consent? Somewhat uniquely, consent seems to bring together several really complex issues that we refer to as 'interaction challenges'.

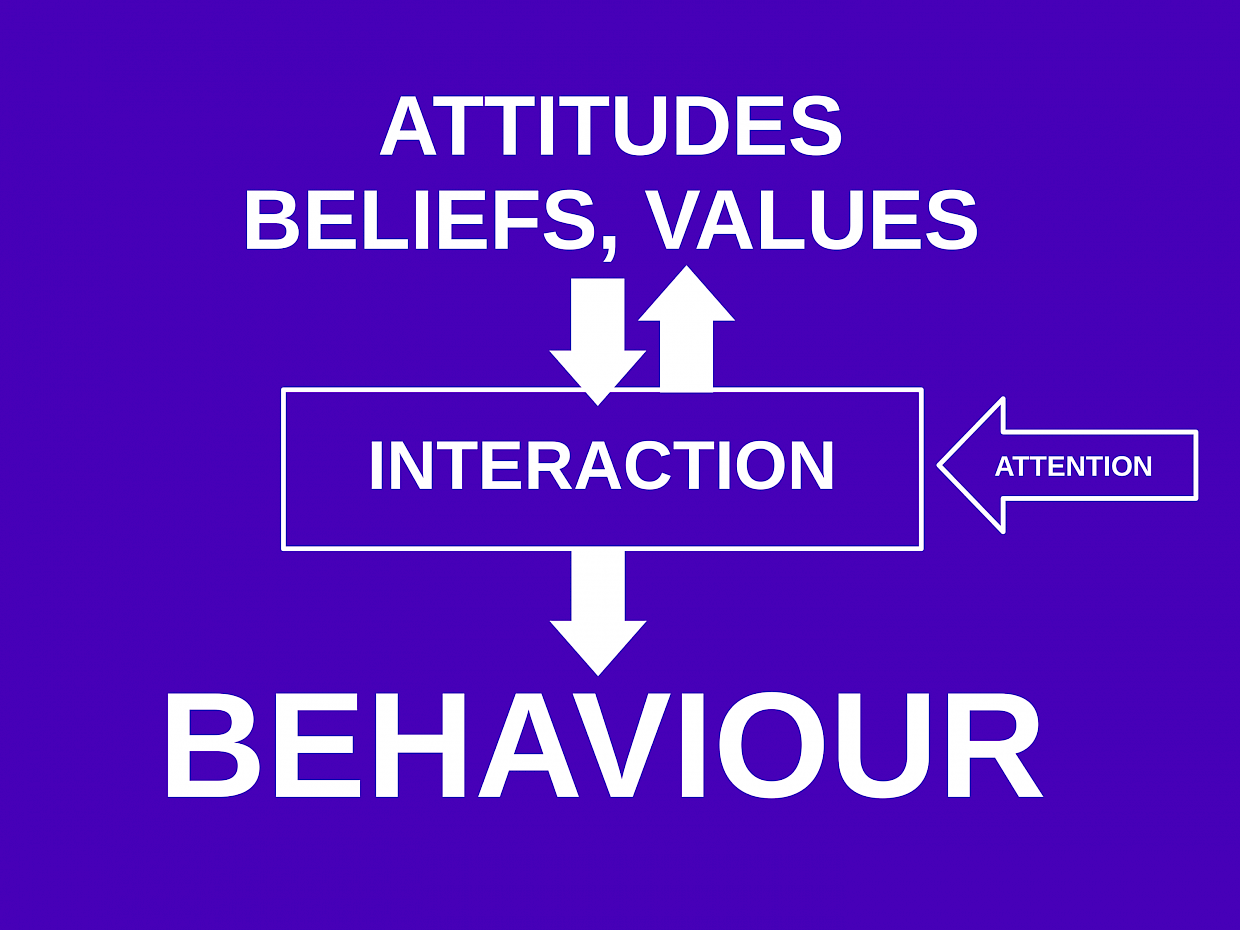

Bounded Rationality

The first of these, 'bounded rationality', refers to people’s limited capacity for making what we might call 'rational' decisions. We see this in public health issues like smoking or healthy eating, where people often make decisions that satisfy short-term needs at the expense of longer-term interests. The same thing happens when we ask for people’s consent: people don’t have the attention to spare for complex decisions, and often act to complete short-term tasks even if their actions are detrimental to other things they’d say they care about, like privacy.

Predicting the Future

To some extent, making good decisions requires an ability to predict the future. That’s why, in a medical context, a surgeon will tell a patient about possible side effects when getting consent to perform a medical procedure. Of course, prediction is inherently hard and, when it comes to personal data, the consequences that people might care about are diverse, and very specific to individuals and their particular circumstances. People are well placed to understand their own contextual concerns and risks, though, and so by bringing them the right pieces of information at the right times, we should be able to help them make decisions that are appropriate to their own circumstances. In doing so, we should help them to be (and to feel) safe as they engage with data-driven services.

Meaningless Transparency

Transparency, the idea that we should be open about how data will be collected and used, is part of privacy and data protection regulation around the world. Transparency alone doesn’t seem to be enough to help people understand how their data will be used, though. For instance, in the EU, the concept of a 'purpose' is fundamental to how personal data is regulated, but there are many ways to explain the purpose of processing, and in practice most services tend to opt for quite vague descriptions like 'to improve your experience'. As a result (and despite the sheer number of words that are provided) there’s very little meaningful transparency about how personal data is used. Even worse, research shows that lawyers’ interpretations of privacy policies and terms of service are very different to what most people would understand, and the lawyers often don’t even agree with one another!

Breakdown

Perhaps most fundamentally, the way that many companies and organisations currently get consent is pretty disruptive; we frequently tear people’s attention from the task they’re engaged in and ask them to consider complex abstract concepts like privacy. We shift their focus from the thing they’re trying to achieve to an obstacle that we’ve put in their way. Martin Heidegger refers to this experience of a tool becoming the focus of someone’s attention as 'breakdown', and it seems to be inherently unpleasant to experience. There are some promising techniques for reducing breakdown, for instance using assistive agents that can do some of the ‘thinking’ for us, but they’re still a few years away from widespread use.

There are some difficult tensions contained within these challenges. We want to help people avoid unforeseen consequences, but in a way that is not disruptive; we want to deliver transparency through useful and relevant information, but must also work within the limits of people’s bounded rationality; and we want to design things at scale which are useful to very diverse audiences of complex individuals.

That’s not to say that consent is intractable, though. A combination of greater digital literacy, privacy awareness and design innovation is driving progress, as the outputs from TTC Labs demonstrate. Even where companies and organisations fall short of 'perfect' consent, good design can reduce the discomfort of the breakdowns that we create and can present people with the important facts that will form the basis for good choices. These are design challenges, not impossibilities.

Richard Gomer's subject matter expert talk | São Paulo Design Jam | August 2017

The views expressed in this article reflect those of the author. TTC Labs seeks to include a diverse range of perspectives and expert insight to encourage a constructive exchange of ideas.